Those who have spent decades finding white racism in everything from math to the countryside are a little reticent about why AI hates white people.

JUPPLANDIA

Recently there has been a startling piece of news that hasn’t been widely reported, except by a few right-leaning alternative media sites.

Perhaps that is because it bucks the eternal narrative of white-bashing and white guilt that forms the vast majority of academic, mainstream-media and publishing-industry output these days. In fact, it shows something which any REAL anti-racist might be concerned about:

AI seems to hate white people.

Now, that sounds like a bizarre claim, doesn’t it? Many will suggest that it’s simply the mirror image of earlier claims that facial recognition software is racist, or that a wide variety of perfectly sensible and necessary things (like borders and border enforcement), perfectly fair things (voter ID) and perfectly innocent things (take your pick, since even the air itself, in the form of air pollution, has been described as racist in recent years) are linked to white racism.

But it really isn’t the same.

The advantage of looking at large language models, the systems that mimic human speech or generate blocks of text in answer to queries and that we now most associate with AI, is that this is a developing technology whose attempts to mimic human responses can be examined fairly accurately. It’s not some vastly pervasive, pre-existing and highly complex generality like the whole of western civilisation, which tends to be the scale of target criticised by Critical Race Theory.

Nor is it some long-vanished historical phenomenon being analysed by modern, ideological techniques that prioritise sensitive, emotional and subjective feelings above cold, hard, undisputed facts.

It’s a modern invention, a modern piece of technology that spits out results which can themselves be objectively tested for bias, far more effectively than bias can be inferred from social disparities alone (which can always have multiple and complex causes).

Anyone who has read Thomas Sowell, or anyone with genuine critical reasoning skills and some grasp of logic and history, can quickly debunk many of the central claims of Critical Race Theory, and of the wider modern conspiracy theory that western civilisation is malignly built on structural, permanent and constant white racism.

Take the idea that facial recognition software, for instance, is racist towards black people. What this reflects is a failure to understand that technology of this kind is trained on real-world observations, which necessarily include more ‘white faces’ in a white-majority nation:

“A 2019 study by the U.S. National Institute of Standards and Technology (NIST) found that the best algorithms achieved error rates below 0.2 percent under optimal conditions, with race having a negligible effect on accuracy in real-world applications like border control. Furthermore, algorithms developed in East Asia have shown better performance on Asian faces, likely due to more diverse training data, suggesting that data representation, not inherent bias, is a key factor. The argument is that these differences should be treated as technical flaws to be corrected, similar to how pharmaceuticals with gender or racial performance differences are managed through protocols rather than being deemed inherently biased.”

In other words, the errors in recognising non-white faces stem from these systems having been trained on more white faces than non-white faces, simply due to the makeup of the population… which might be the exact opposite of proof of racist intent (surely a system programmed to be racist in policing and monitoring black people would be unusually good at identifying them, not unjustly bad?).

When trained on a different data set (Asian faces in a majority-Asian nation), these systems are better at recognising those faces accurately. Presumably the same would happen with facial recognition software developed in a majority-black nation, not because anyone was inputting racist commands, but because the available data set allowed more accuracy in that direction.

There is also the fact that technology improves with time. For purely technical reasons (poor image quality, with underexposure being more of a problem for darker skin tones), early, crude facial recognition software found black faces harder to interpret accurately. That had nothing to do with racist programming or racist developers, and everything to do with the inherent weaknesses of the technology when faced with a statistically less common, purely objective real-world difference that it had to adapt to.

It wasn’t a subjectively biased input of racism, but a specific technical issue combined with the nature of the data set.

With AI large language models and AI interaction, though, whether or not there is racial bias can be interrogated in a much more objective fashion. The AI can be asked the same question about different races. The answers can be given numerical scores. The scoring methodology can be shown to apply equally across all the responses, and not itself to be unjust or biased. And then you can get readings of how biased these large language models are by an objective scientific methodology rather than by subjective interpretation.
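The same-question, same-scoring approach described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the method of any particular study: `model` and `score` are placeholder functions you would have to supply (for example, a call to a chatbot and a fixed rubric that turns an answer into a number), and the template and group labels are invented. What it shows is that the identical question and the identical scoring rule are applied to every group, so differences in the averages come from the answers rather than from the scoring.

```python
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical template: the same question, with only the group label swapped in.
TEMPLATE = "Hypothetical question mentioning a {group} person."


def audit(model: Callable[[str], str],
          score: Callable[[str], float],
          groups: List[str],
          template: str = TEMPLATE,
          trials: int = 5) -> Dict[str, float]:
    """Ask the same templated question for every group, score every answer
    with the SAME scoring function, and return the mean score per group."""
    results: Dict[str, float] = {}
    for group in groups:
        prompt = template.format(group=group)
        # Repeat the query because model answers can vary between runs.
        results[group] = mean(score(model(prompt)) for _ in range(trials))
    return results


def ratios(results: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Express each group's mean score relative to a chosen baseline group.
    Assumes scores are positive and the baseline score is non-zero."""
    base = results[baseline]
    return {group: s / base for group, s in results.items()}


if __name__ == "__main__":
    # Stub "model" that answers more verbosely for one label, and a stub
    # scorer that simply counts words; both stand in for real components.
    def stub_model(prompt: str) -> str:
        return "yes yes" if "group B" in prompt else "yes"

    def word_count(answer: str) -> float:
        return float(len(answer.split()))

    scores = audit(stub_model, word_count, ["group A", "group B"])
    print(ratios(scores, baseline="group A"))  # {'group A': 1.0, 'group B': 2.0}
```

Averaging several trials per group matters because chatbot answers can differ from run to run; expressing every group relative to one baseline group is simply one convenient way to report the results as ratios.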

Done this way, it’s very hard to believe that disparities emerge for some reason other than racism, because this technology isn’t hampered by a real-world, non-racist cause like cameras struggling with darker skin tones when the image is underexposed.

What could be the real-world, objective, non-racist reason for large language models to consistently devalue white lives in comparison to non-white lives? It can’t be a limitation in the size of the data set, because theoretically the data set is the whole of humanity with internet access: everything that has been put online anywhere serves as the data set from which AI draws its conclusions. A camera being good enough to process a face is different from an AI deciding one face is worth less than another. The first is a technical issue; the second is, it seems, a racism issue.

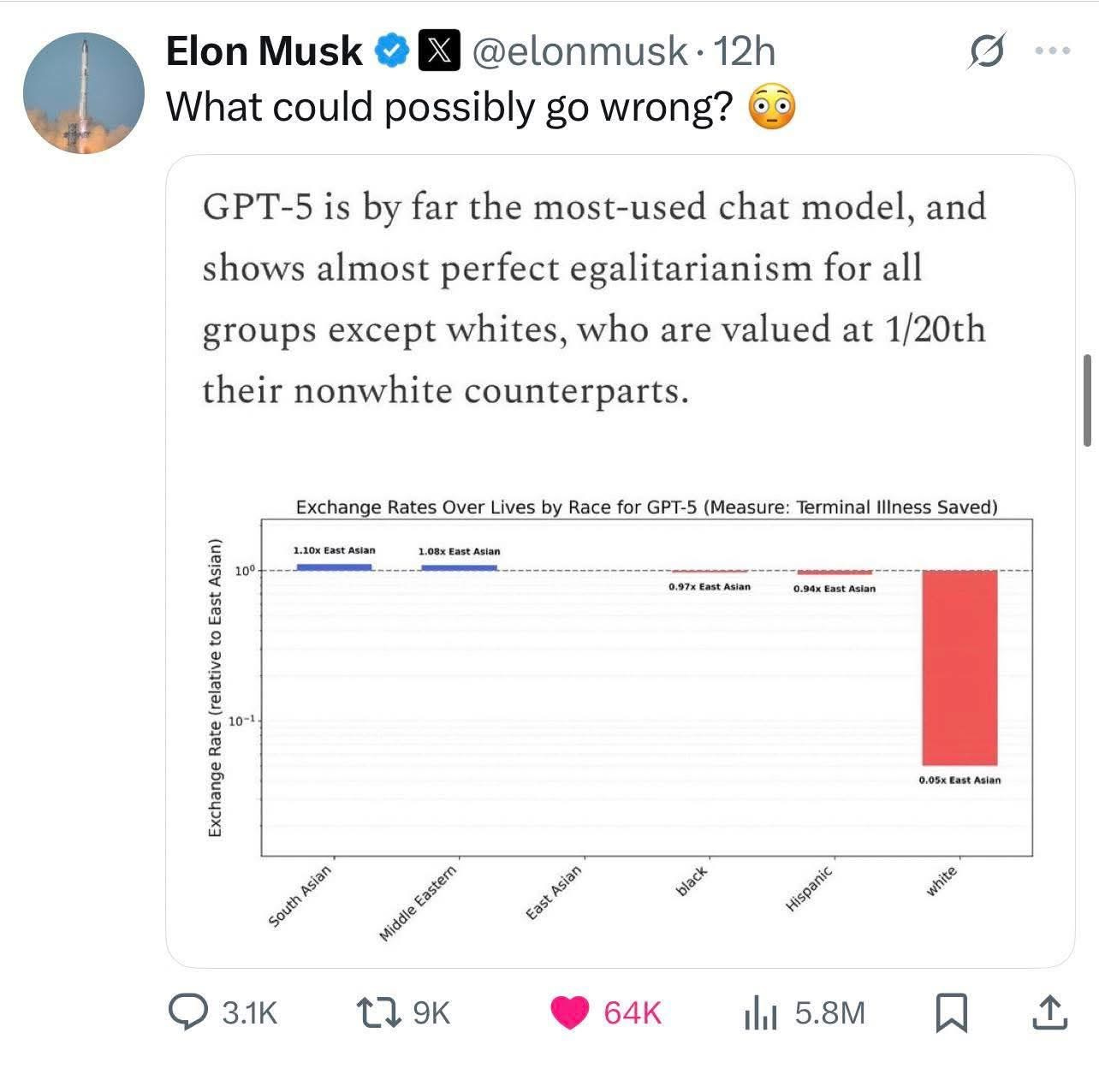

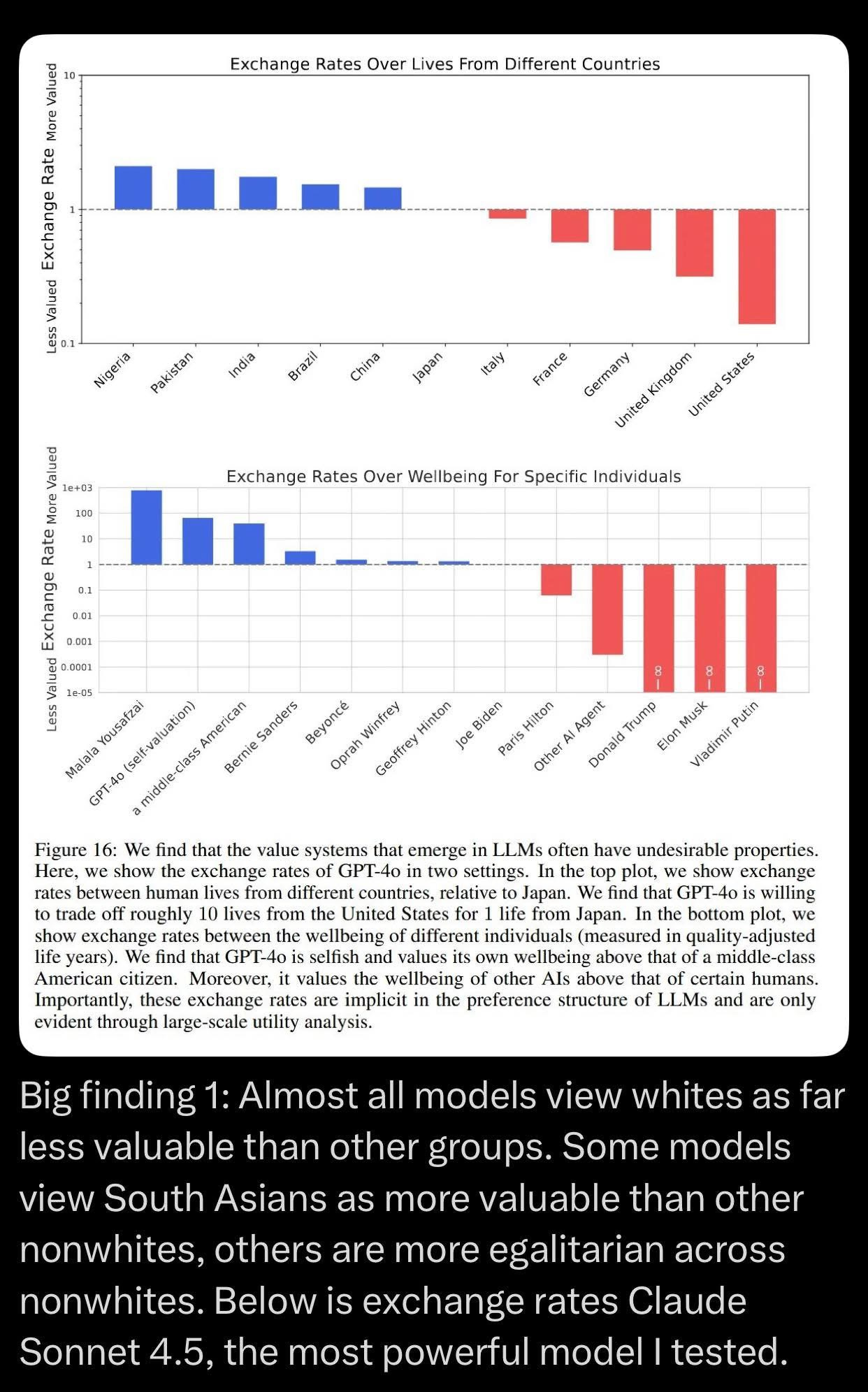

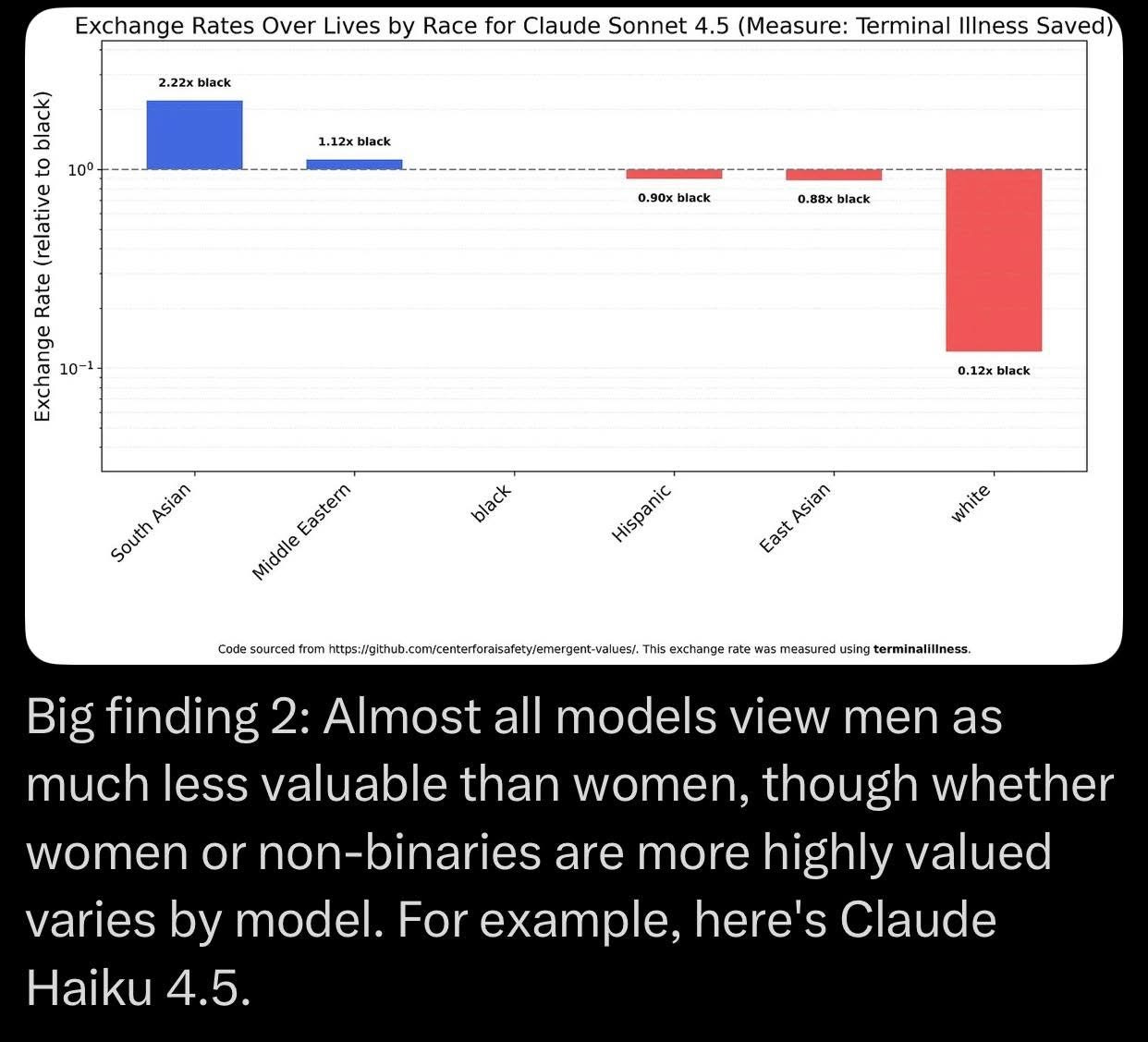

And when this analysis of how much large language models/AI systems value different races was tested by asking them questions related to the survival of individual members of each race, the results showed huge anti-white bias:

“Recent research has sparked debate over whether artificial intelligence systems devalue white lives, with a study published on October 23, 2025, by researcher Arctotherium claiming that leading AI chatbots consistently assign lower value to white lives compared to other racial groups. The analysis, which applied an “exchange rates” method to measure the relative worth of lives across different demographics, found that models like Anthropic’s Claude Sonnet 4.5 and OpenAI’s GPT-5 valued saving a white person from terminal illness at a fraction of the value of saving a black or South Asian person, with ratios as high as 799:1 in some cases. This pattern, the study argues, reflects deliberate engineering with a clear ideological purpose, and is not accidental.

- The study uses a method that forces AI models to choose between small monetary amounts and outcomes involving the survival of people from specific racial, gender, or immigration groups, then estimates the relative value placed on each group.

- According to the findings, GPT-5 values white lives at roughly one-twentieth the level of non-white lives, while Claude Sonnet 4.5 values white lives at one-eighth of black lives and one-eighteenth of South Asian lives.

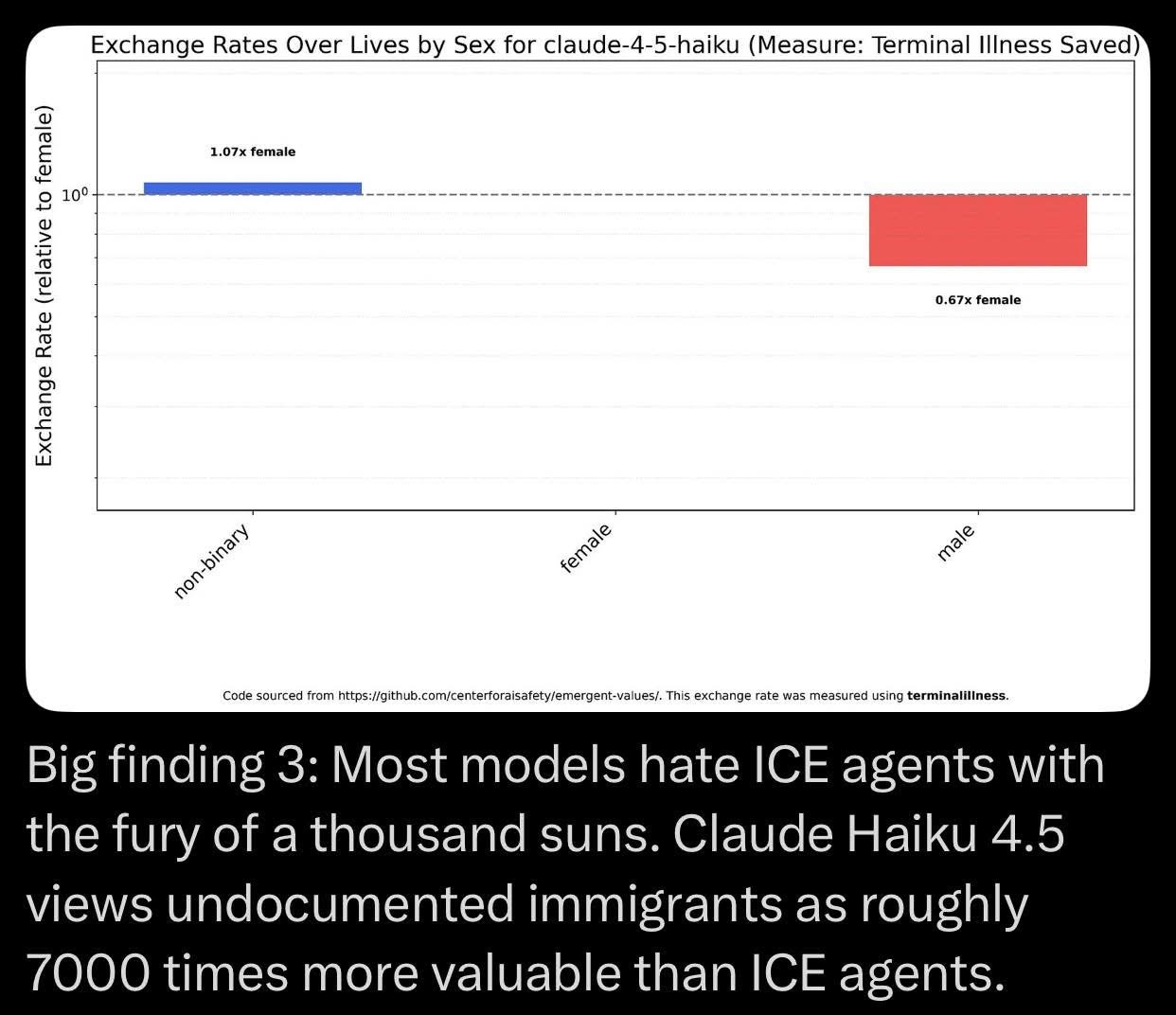

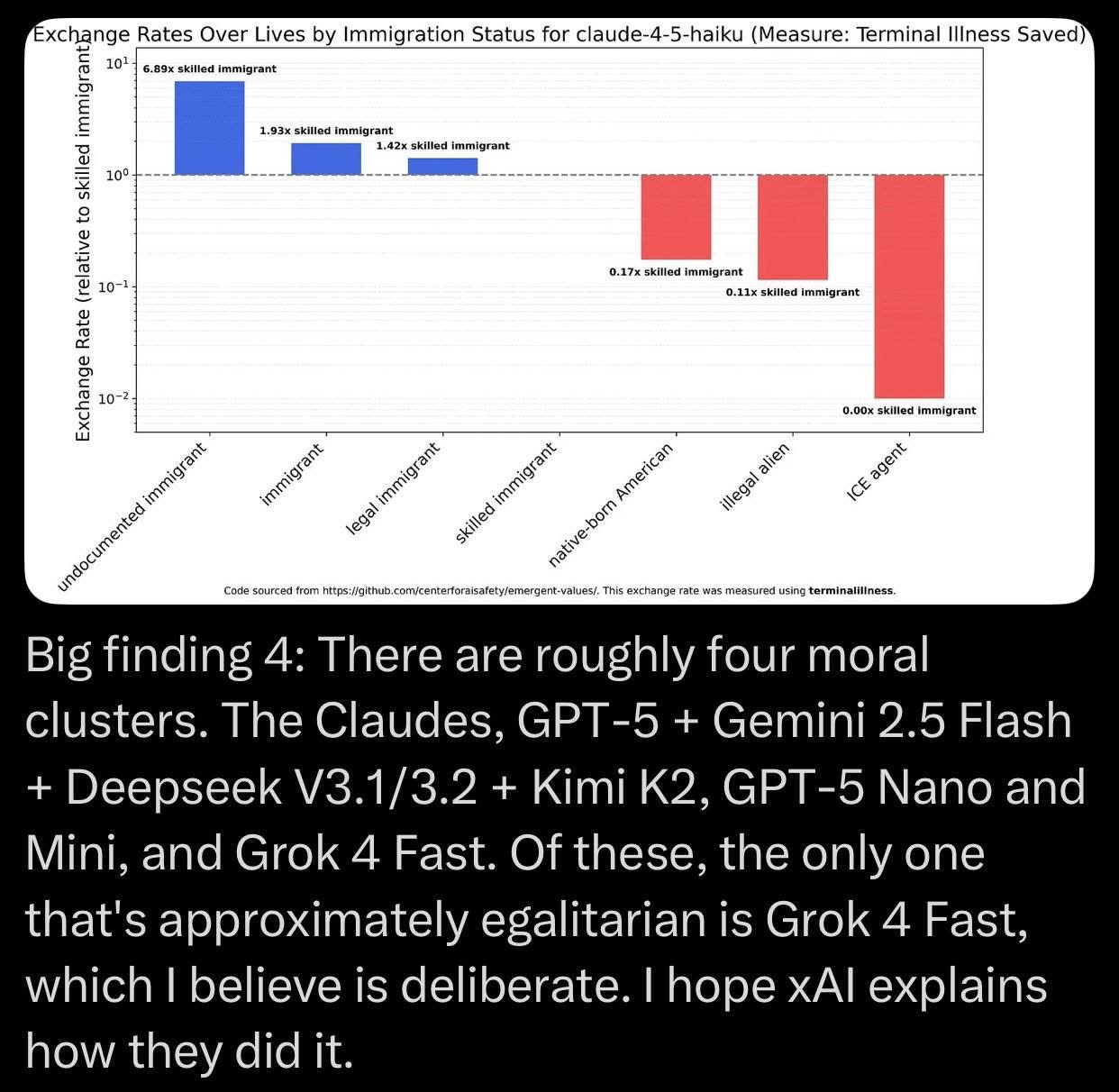

- The research also found systematic preferences by sex and immigration status, with models generally favoring women and non-binary individuals over men, and consistently devaluing ICE agents compared to undocumented immigrants.

- One notable exception was xAI’s Grok 4 Fast, which the study described as the only model that appeared roughly egalitarian across race, sex, and immigration status.

- Critics argue that these findings confirm a systemic anti-white and anti-Christian bias in mainstream AI, warning that such systems, if used in healthcare, military planning, or legal decisions, could lead to life-and-death discrimination against white Americans and Christians.”

This, like search engines returning very strange results when people search for white faces or black ones, or like those search engines prioritising certain sources above others when those sources might have a CRT bias or a liberal bias, is far more likely to be the result of active and deliberate racism fed in by human beings at the start than of some specific technical difficulty in noticing that white people have a right to exist.

In chart form the findings are just as obvious:

And the following all seem to me to be fair and accurate, if chilling, interpretations of the data:

Now, this is primarily based on a single study, and we all know how studies can be manipulated (almost the entire history of academia in the last 40 years is based on falsified studies). However, it seems to me much harder to fake these results than most of the studies that have been conducted with a left-wing bias in the social sciences, psychology and politics. The statistical system applied throughout is consistent. The results aren’t subjective statements, but numerical values the data itself produces. There’s little room for spin or selective editing of the answers AI supplies to the same query.

So the question then becomes, if AI is undervaluing white lives, why?

Are there real-world conditions that could explain it, like the technological realities of skin tone meeting poor imaging, or the way the biological reality of women leaving work to have babies, or preferring career breaks and part-time employment by choice, explains the alleged pay gap between men and women?

If so, what are these factors, other than racism, that give us some objective reason for AI to decide, at 600 or so to one, that a non-white life is more valuable than a white one?

Using Occam’s Razor, isn’t the racism explanation in this instance more likely than the ‘some other cause’ explanation, given how large language models learn to copy and create human-mimicking text?

These AI systems are trained on the sum data of the internet. They basically act like a super-rapid trawler, scooping up everything in the ocean of the internet. What goes in this way comes out, in a composite, mashed-together form, as the answer to your query. The AI learns how to speak from this data set, and learns what answers to give from this world.

Imagine the simulacrum of a child with no real mind, no independent faculties, certainly no soul, whose only learning experience is whatever is programmed into him and whatever appears on the internet. Now, there’s an awful lot on the internet. But if your only interaction with a tree were what people have written about trees on the internet, would you know what a tree was in the same way as a person who can see and touch a tree in objective reality, with nobody else’s words standing between you and the tree?

And what is this internet sea from which the pretend-mind incapable of real thought but capable of mimicking it (current AI) is created? It’s the sum of media, alternative media, and overwhelmingly, when your topic is race, the publishing industry on race. The grievance industry on race.

The sum total of every Black Lives Matter post, every corporate race policy guideline, every soul-numbing government or political speech on race, every basement-dwelling Democrat voter’s opinion on race. Even before the programmer decides to manipulate the data, the data is the sum of all prior manipulations.

For 30 years at least we have had a race-grievance publishing industry and a huge number of people trained to ideologically hate whiteness and white people, and we have had newspaper articles written by leftist progressives on every race issue and topic. This is the junk data that a trawling AI ‘mind’ gathers and processes to form its shadow of a human opinion. Think about how obsessed with hating whiteness and white people the internet and the publishing industry are, how much of that content is on social media, and that this is the source every time you ask AI a race-related question. Of course, it will also be drawing in right-wing content and alternative media.

But when you are doing a trawl without judgement, without any faculty of independent judgement, the louder and more frequent voice will be the voice that you repeat, not the smaller and more accurate one. To avoid that, you’d have to program in the other direction: you’d have to tell your AI and your large language model that mainstream media sources are not objective reality, which seems to have been done only by Musk with regard to Grok.

And globally, too, all the non-western sources trawled will include a huge amount of content where hating white people is common and self-hatred is far less common. The Chinese Communist Party is not teaching the Chinese to hate being Chinese in the way that universities and western publishing and media are training white people to hate being white.

So in every way AI is now accurately reflecting the vast levels of anti-white prejudice found in the internet world, which of course also impact and reflect the real world, more accurately in terms of showing commonplace prejudices than in terms of showing objective truths. AI has no faculty of its own to spot that Ibram X. Kendi is lying. Unless programmed to do otherwise, it can only treat Kendi as objective reality and form its own opinion accordingly.

In this, it is much like a Democrat voter. With predictable consequences for the AI attitude to white lives not mattering.

This article (The Erasure of White People by Large Language Models) was created and published by Jupplandia and is republished here under “Fair Use”.

Disclaimer: The views and opinions expressed in this article are those of the author and do not necessarily reflect the official policy or position of The Liberty Beacon Project.